- Algorithms
  - Invert
  - Gamma
  - Box Blur
  - Sobel Edge Detector

How Invert works

Color inversion maps the luminance of each pixel to its opposite. In other words, white is mapped to black and black is mapped to white. For an image with red, green, and blue color channels and 8 bits per channel, the formula to map each channel's value is:

$$I_{\text{invert}}(x, y) = \text{Luminance}_{\max} - I(x, y)$$

where $I$ is the image, $\text{Luminance}_{\max}$ is 255 for an image with 8 bits per color channel, and $x$ and $y$ are the coordinates of the pixel. For example, the RGB pixel $I(x, y) = (50, 120, 200)$ inverts to $I_{\text{invert}}(x, y) = (205, 135, 55)$.

How Box Blur works

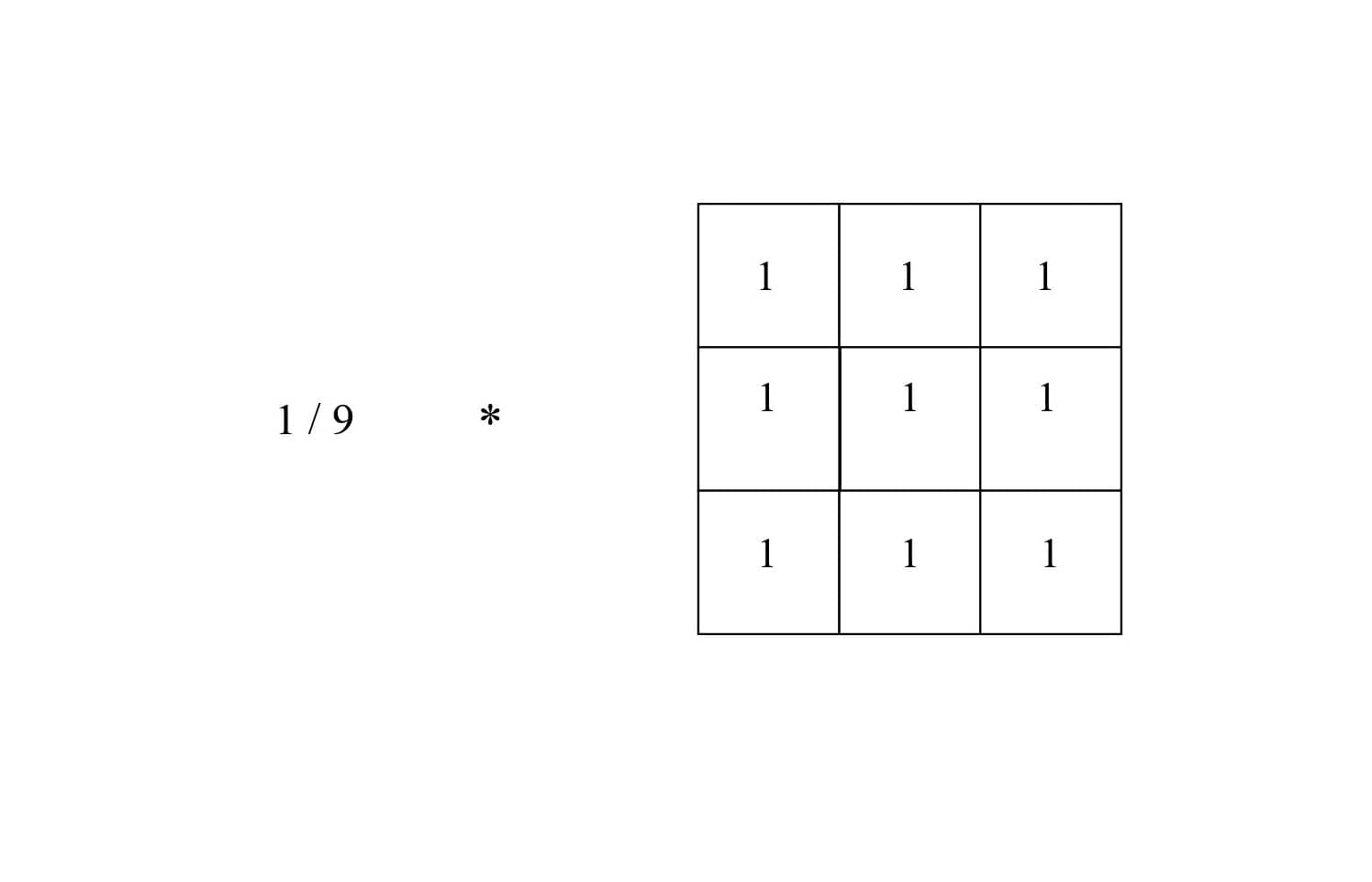

Box blur is a convolution filter that blurs an image. It is also known as a mean filter. It takes the average of the pixel it is centered on and all of the neighboring pixels within the filter's radius, then uses that averaged value as the pixel in the blurred image.

The kernel used for the box blur convolution filter is an n x n matrix of ones divided by the scalar value n * n, where n is the diameter of the kernel in pixels. For example:

3x3 Box Blur Kernel
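Written out, and assuming every entry of the matrix is 1 as in a standard mean filter, a 3x3 box blur kernel would look like this (the symbol $K$ is introduced here only for illustration):

$$K = \frac{1}{9}\begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{bmatrix}$$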

The above kernel is 3 pixels wide by 3 pixels high. Each square overlaps a pixel on the image. The kernel can have any odd diameter from 1 upward; larger kernels create a stronger blur. The diameter is odd because the kernel needs to be centered on a pixel.

Here's a demo of how the box blur kernel convolves with an image:

Short demo of 3x3 box blur. The right matrix is the original image and the left matrix is the result of the convolution of the box blur kernel and the right image.

The kernel starts at the first pixel of the image at the top left corner, with the value of 25. It continues by sliding to the right, calculating the average of the pixels inside the kernel window at each position until the end of the first row, then moves down to the next row.
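The sliding-window average described above can be sketched in plain Python. This is a minimal illustration, not the project's implementation: the function name `box_blur` is hypothetical, the image is assumed to be a grayscale list of rows, and pixels outside the image are handled by clamping to the nearest edge pixel, which is one common border policy that the text does not specify.

```python
def box_blur(image, diameter=3):
    """Blur a 2D grayscale image (list of rows) with a diameter x diameter box kernel.

    Assumption: coordinates outside the image are clamped to the nearest
    edge pixel. Other border policies are equally valid.
    """
    if diameter < 1 or diameter % 2 == 0:
        raise ValueError("diameter must be a positive odd integer")
    height, width = len(image), len(image[0])
    radius = diameter // 2
    result = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0
            # Sum every pixel covered by the kernel window centered on (x, y).
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny = min(max(y + dy, 0), height - 1)
                    nx = min(max(x + dx, 0), width - 1)
                    total += image[ny][nx]
            # Dividing by n * n turns the sum into the mean.
            result[y][x] = total / (diameter * diameter)
    return result


image = [
    [10, 20, 30],
    [40, 50, 60],
    [70, 80, 90],
]
blurred = box_blur(image, diameter=3)
print(blurred[1][1])
```

With a diameter of 1 the window covers only the center pixel, so the image comes back unchanged apart from being converted to floats.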

How Gamma works

Gamma, otherwise known as gamma encoding and decoding, or gamma correction, refers to expanding or compressing an image's range of intensity values. When an imaging system captures an image, the light intensity information is captured linearly. In other words, if the camera records one light intensity with a value of 100, then a value of 200 represents twice that brightness. However, the human vision system perceives light nonlinearly: we are more sensitive to differences between darker tones than between lighter tones. Gamma encoding takes advantage of our perception of light intensity by transforming the linear output of digital imaging devices into a perceptually uniform scale.

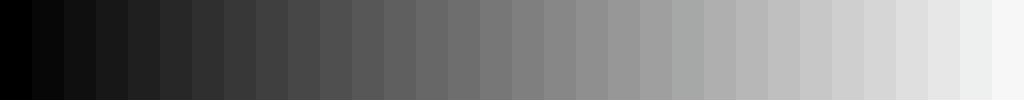

An example of perceptual brightness vs linear brightness differences is shown by the diagram below:

Perceptually linear image

Linear image

The images above compare a gamma-encoded image with an image without gamma encoding. The transformation for gamma encoding is:

$$I_{\text{encoded}}(x, y) = I_{\text{linear}}(x, y)^{1/\gamma}$$

where γ is the gamma value, x and y are the pixel coordinates, and I is the image.

The difference in value between neighboring shades isn't constant in the perceptually linear image, while in the linear image it is. Even so, the linear image doesn't appear to progress naturally from shade to shade, while the perceptually linear image does. The steps look more uniform in the perceptually linear image because of our nonlinear vision system. When the image is displayed, the monitor and GPU apply another gamma transformation, which is supposed to effectively undo the gamma encoding already applied to the image. This is known as gamma decoding. The transformation used for gamma decoding is given by:

$$I_{\text{linear}}(x, y) = I_{\text{encoded}}(x, y)^{\gamma}$$

The monitor expects gamma encoding to have been applied to the image before it is displayed. The perceptually linear image looks uniform because the gamma applied by display devices closely approximates the perceptual uniformity the human vision system expects. For most display devices, the gamma encoding they expect is given by:

$$I_{\text{encoded}}(x, y) = I_{\text{linear}}(x, y)^{1/2.2}$$

Then to display the image, the monitor will apply the gamma:

$$I_{\text{linear}}(x, y) = I_{\text{encoded}}(x, y)^{2.2}$$

to decode the image.
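As a rough sketch of the encoding and decoding formulas above, the two transformations can be written as a pair of Python functions. The function names are hypothetical, and the code assumes intensities are first normalized to the range 0 to 1, which the formulas need in order to keep values in range but which the text does not state explicitly.

```python
GAMMA = 2.2  # typical display gamma, as used in the text


def gamma_encode(linear, gamma=GAMMA):
    """Encode a linear intensity in [0, 1]: encoded = linear ** (1 / gamma)."""
    return linear ** (1.0 / gamma)


def gamma_decode(encoded, gamma=GAMMA):
    """Decode an encoded intensity in [0, 1]: linear = encoded ** gamma."""
    return encoded ** gamma


def encode_8bit(value, gamma=GAMMA):
    """Gamma-encode an 8-bit value by normalizing, encoding, and rescaling."""
    return round(255 * gamma_encode(value / 255, gamma))


mid_gray = gamma_encode(0.5)
round_trip = gamma_decode(mid_gray)
print(mid_gray, round_trip)
```

Encoding lifts a linear mid-gray of 0.5 to roughly 0.73, and decoding brings it back to 0.5, which is the sense in which the display's gamma undoes the encoding.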

Why Gamma is Useful

Generally, images are stored using 8-bit RGB channels. Looking at the linear and perceptually linear images above, if 8 bits were used for the linear image, then too many bits would be used to represent lighter values, while too few would be used for darker tones, which humans are more sensitive to. To describe more dark tones using linear encoding, one would have to use 11 bits to get sufficient representation to avoid image posterization. In other words, 11 bits have to be used to get smooth gradients in the image, which are important for things like skies.

However, if images are stored using 8-bit gamma-encoded values, then it's possible to get smooth gradients using only 8 bits because gamma encoding redistributes tonal levels closer to how our eyes perceive them. Gamma encoding helps describe images using fewer bits, thus using less storage and memory.
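One way to see the redistribution of tonal levels is to count how many of the 256 available 8-bit codes land in the dark end of the range. The sketch below is illustrative only: the helper `codes_below` is hypothetical, the gamma of 2.2 is taken from the section above, and the cutoff of 10% linear intensity is an arbitrary choice for the demonstration.

```python
GAMMA = 2.2  # display gamma from the previous section


def codes_below(linear_limit, gamma=None, levels=256):
    """Count codes whose decoded linear intensity is below linear_limit.

    gamma=None models linear storage; otherwise each code is decoded
    with stored ** gamma before the comparison.
    """
    count = 0
    for code in range(levels):
        stored = code / (levels - 1)
        linear = stored if gamma is None else stored ** gamma
        if linear < linear_limit:
            count += 1
    return count


linear_codes = codes_below(0.1)        # linear 8-bit storage
gamma_codes = codes_below(0.1, GAMMA)  # gamma-encoded 8-bit storage
print(linear_codes, gamma_codes)
```

Under these assumptions, linear storage spends 26 codes on the darkest 10% of intensities while gamma-encoded storage spends 90, so dark tones get far finer steps from the same 8 bits.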

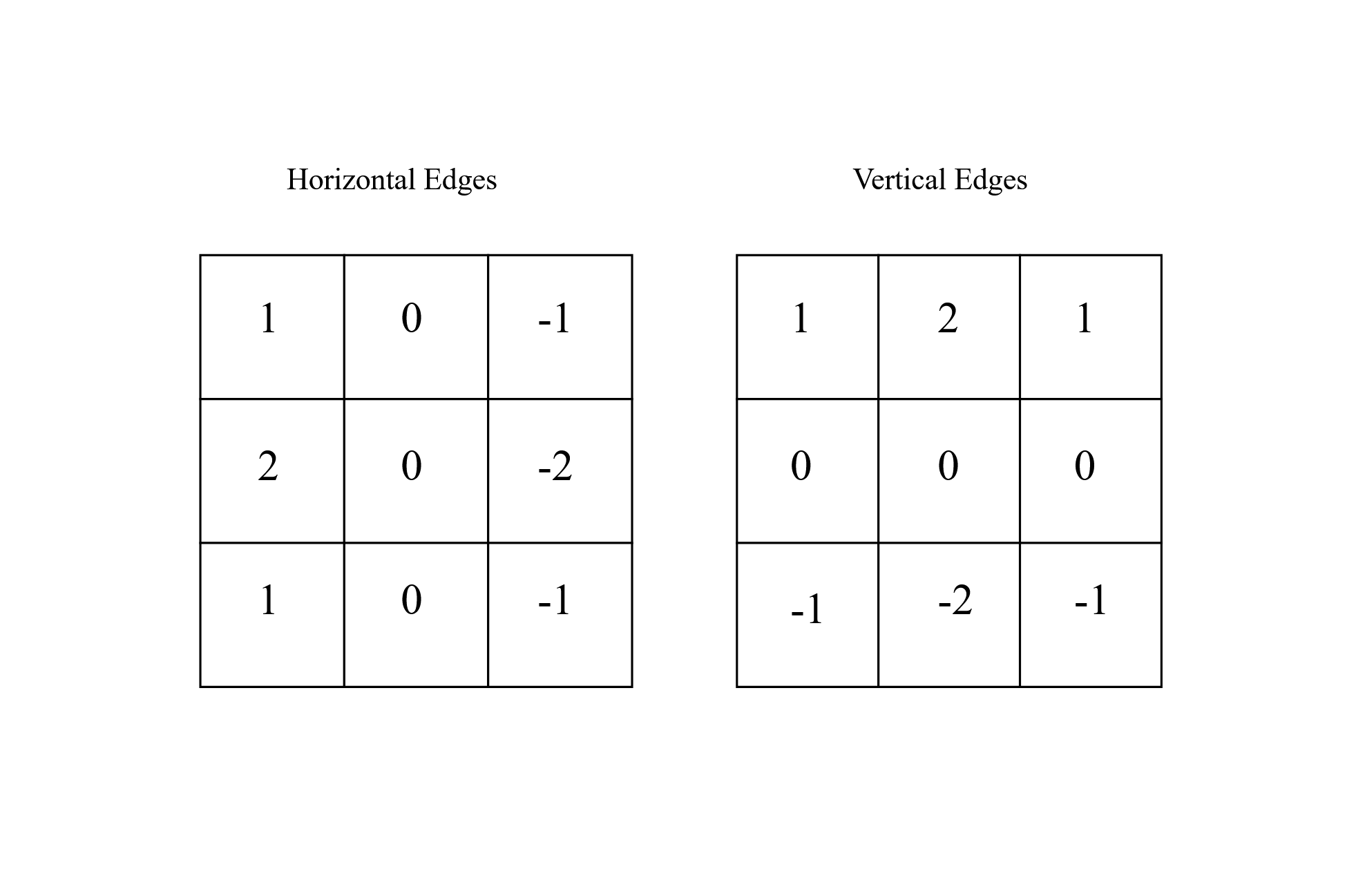

Sobel Edge Detector

The Sobel edge detector is one of the more basic edge detectors used in image processing. The goal of an edge detector is to find areas of an image where there are abrupt changes in brightness or color. Most edge detectors primarily work with brightness changes because grayscale images, which only show luminance, are easier to work with than color images. Color images require a little more work because two colors might be different and form an edge between each other but have the same brightness, which means that edge wouldn't be detected by popular edge detectors like Canny and Sobel.

The Sobel operator finds edges by using two 3 x 3 convolution kernels to find the difference between two regions of an image. Finding the edges of an image is broken into two steps. One operator goes through the image to find the vertical edges and another is used to find horizontal edges. The two kernels are:

The left kernel finds horizontal edges

The right kernel finds vertical edges

An edge is essentially the difference in intensity between two areas. The horizontal edges are found by subtracting the right half of the kernel from the left half of the kernel as it passes over the image. The vertical edges are found by subtracting the bottom half of the kernel from the top half of the kernel. Finally, the results for the vertical and horizontal edges are combined using the formula:

$$I(x, y) = \sqrt{I_h(x, y)^2 + I_v(x, y)^2}$$

where $I_h$ is the horizontal edge image, $I_v$ is the vertical edge image, and $x$ and $y$ are the pixel coordinates.
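The two passes and the formula above can be sketched as follows. The kernel coefficients are the commonly published Sobel weights and are an assumption here; their signs follow the text's description (left minus right, top minus bottom). The names `KERNEL_H`, `KERNEL_V`, `apply_kernel`, and `sobel_magnitude` are hypothetical, and leaving border pixels at 0 is a simplification.

```python
import math

# Assumed standard Sobel weights. Signs follow the text:
# left half minus right half, and top half minus bottom half.
KERNEL_H = [[1, 0, -1],
            [2, 0, -2],
            [1, 0, -1]]
KERNEL_V = [[1, 2, 1],
            [0, 0, 0],
            [-1, -2, -1]]


def apply_kernel(image, kernel, x, y):
    """Weighted sum of the 3x3 neighborhood centered on (x, y)."""
    total = 0
    for ky in range(3):
        for kx in range(3):
            total += kernel[ky][kx] * image[y + ky - 1][x + kx - 1]
    return total


def sobel_magnitude(image):
    """Combine both kernel responses; border pixels stay 0 (an assumption)."""
    height, width = len(image), len(image[0])
    edges = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            ih = apply_kernel(image, KERNEL_H, x, y)
            iv = apply_kernel(image, KERNEL_V, x, y)
            edges[y][x] = math.sqrt(ih * ih + iv * iv)
    return edges


# A dark left half next to a bright right half.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
edges = sobel_magnitude(image)
print(edges[1][1], edges[1][2])
```

The raw magnitudes can exceed 255 for an 8-bit input, so a real pipeline would likely clip or rescale them before display; that step is left out of this sketch.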

Horizontal edge image

Vertical edge image

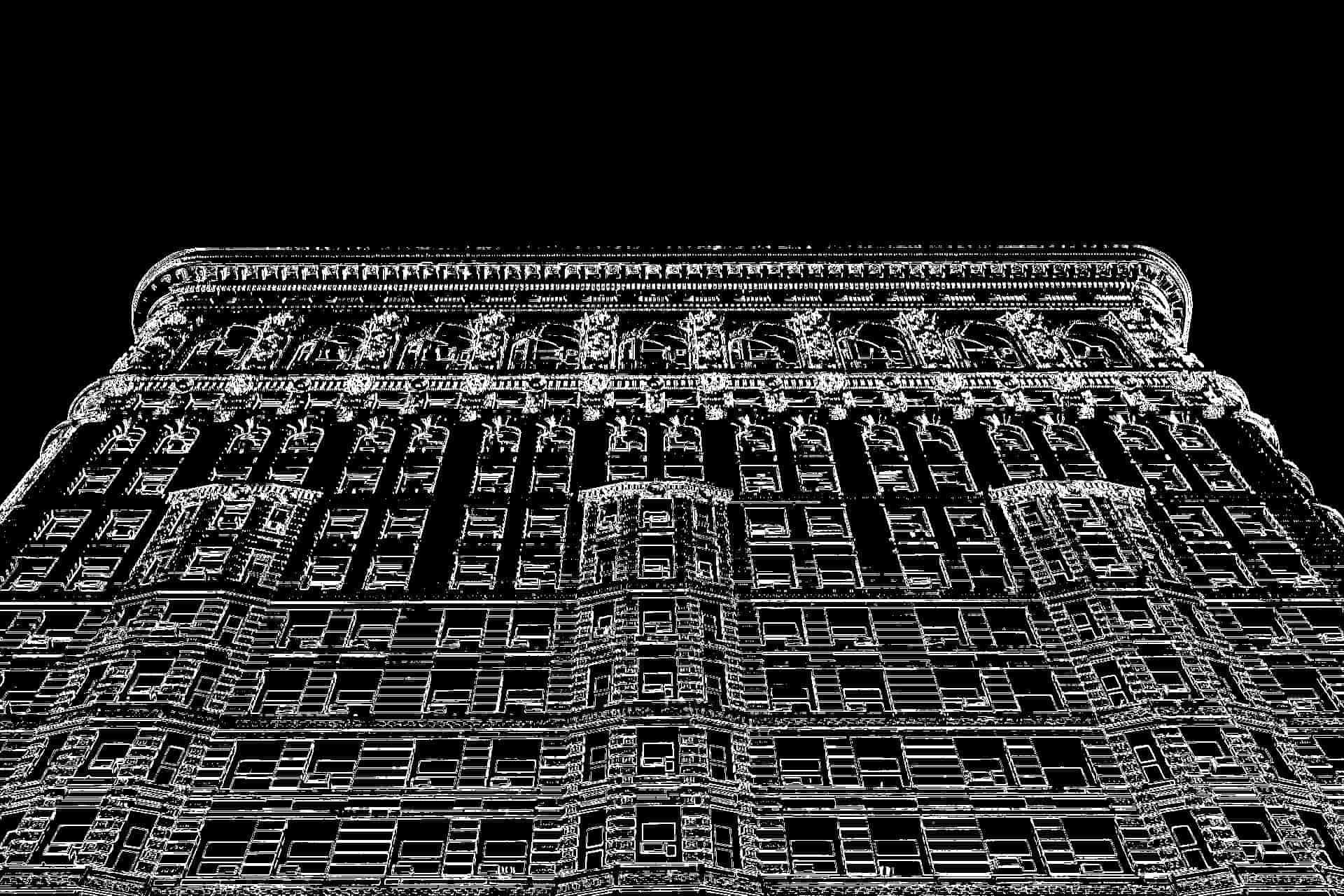

Once the two edge images are combined, a threshold is applied to the result. The threshold decides whether the difference between two areas is large enough to count as a strong edge. Thresholding is useful for keeping image noise out of the final edge image. A lower threshold shows more edges but also more noise, while a higher threshold shows less noise but keeps only the stronger edges. The ideal threshold depends on the application and the amount of noise already in the image. The threshold used for the example assumes an 8-bit image and has a range of 0 to 255. When thresholding an image, if a pixel of the final edge image has a brightness of 200 and the threshold is 100, then that pixel is usually given max brightness because it's above the threshold. Anything below the threshold is given a brightness of 0. This makes it easier to see the edges. I went for an inverted thresholding because it looks like a sketch.
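A minimal sketch of the thresholding step described above, including the inverted variant, might look like this. The function name `threshold_edges` and its parameters are hypothetical, and treating a pixel exactly equal to the threshold as a weak edge is an assumption, since the text only covers values above and below.

```python
def threshold_edges(edges, threshold=100, inverted=False, max_value=255):
    """Map each edge pixel to max_value or 0 depending on the threshold.

    With inverted=True the outputs swap, giving dark edges on a bright
    background. A pixel equal to the threshold counts as weak (an assumption).
    """
    strong, weak = (0, max_value) if inverted else (max_value, 0)
    return [
        [strong if pixel > threshold else weak for pixel in row]
        for row in edges
    ]


edge_row = [[200, 50, 100]]
normal = threshold_edges(edge_row, threshold=100)
sketch = threshold_edges(edge_row, threshold=100, inverted=True)
print(normal, sketch)
```

Here the pixel at 200 becomes 255 in the normal output and 0 in the inverted one, matching the example in the text.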

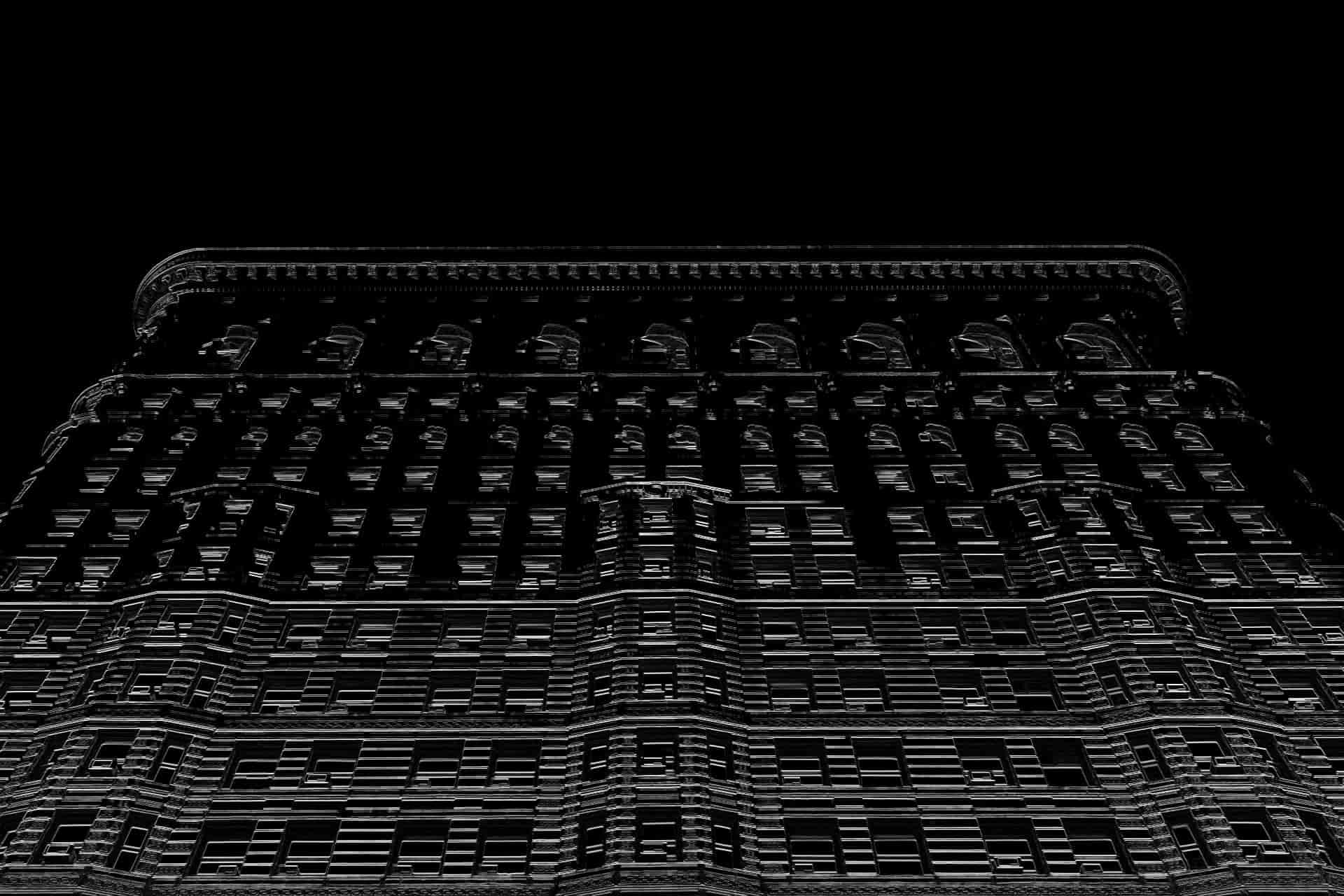

Final edges before thresholding

Final edges image after thresholding

To help with noise control and aid in edge detection, a low pass filter or a median filter is usually applied to an image prior to edge detection. Low pass filters like box blur and Gaussian blur can average away noise while maintaining most of the edge information of the image. Median filters are commonly used for noise reduction and make a good preprocessing step before edge detection.
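For comparison with the averaging filters, here is a small sketch of a median filter used as a preprocessing step. It is an illustration under stated assumptions rather than the project's code: the name `median_filter` is hypothetical, the window is simply truncated at the image borders, and for windows with an even number of pixels the upper of the two middle values is taken.

```python
def median_filter(image, diameter=3):
    """Replace each pixel with the median of its diameter x diameter window.

    Assumption: the window is truncated at the image borders, so border
    pixels use fewer neighbors.
    """
    height, width = len(image), len(image[0])
    radius = diameter // 2
    result = [row[:] for row in image]
    for y in range(height):
        for x in range(width):
            window = [
                image[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            window.sort()
            # Middle element; for even-sized windows this is the upper middle.
            result[y][x] = window[len(window) // 2]
    return result


# A flat region with a single noisy pixel in the center.
noisy = [
    [10, 10, 10],
    [10, 255, 10],
    [10, 10, 10],
]
cleaned = median_filter(noisy)
print(cleaned)
```

The single outlier is removed entirely, whereas a box blur would spread part of its brightness into the neighboring pixels.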